How distributed training works in PyTorch: distributed data-parallel and mixed-precision training | AI Summer

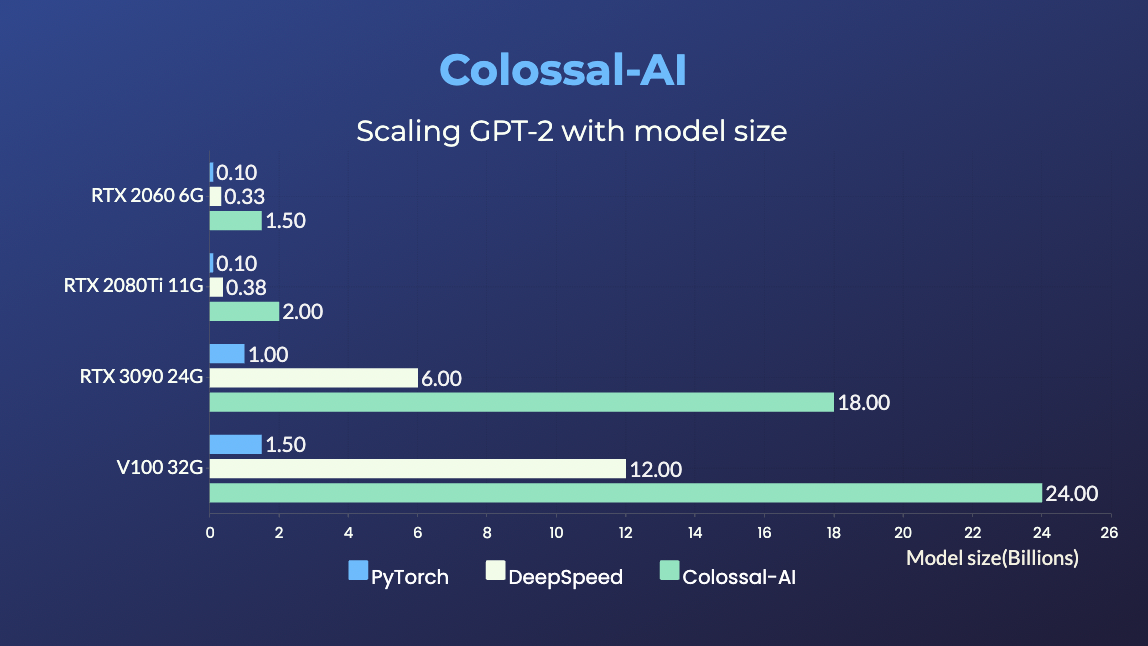

Train 18-billion-parameter GPT models with a single GPU on your personal computer! Open source project Colossal-AI has added new features! | by HPC-AI Tech | Medium